Escaping the Trap of Point Solutions: Why Systems Thinking Is the Missing Link in AI Governance

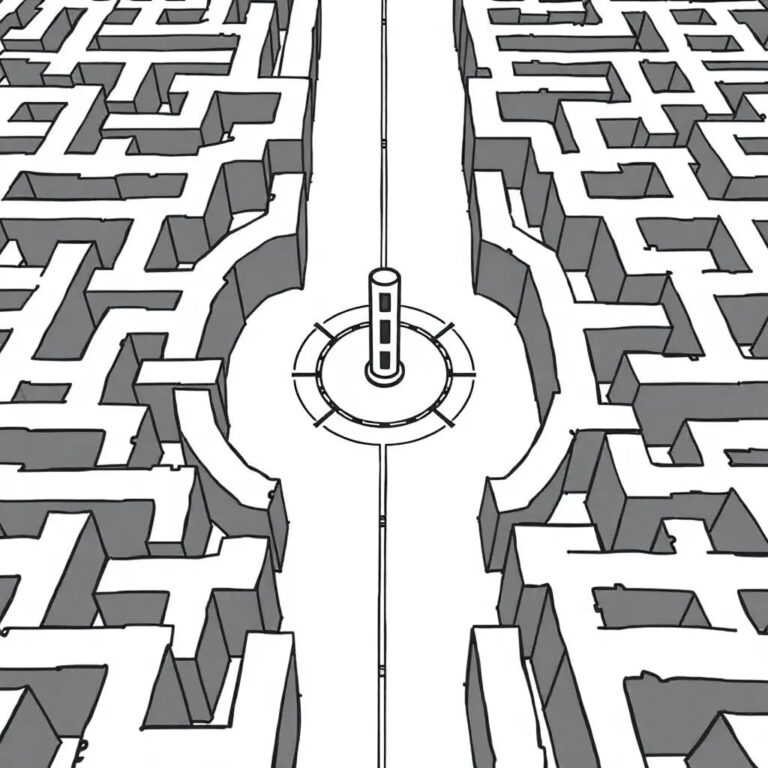

At the core of AI governance lies a challenge that is less technological than systemic: understanding the complex interactions among human behavior, organizational structures, and emerging AI capabilities. Too often, efforts focus on a single aspect of the problem, a “point solution,” that leaves the underlying systemic issues unaddressed.

The Pitfalls of Point Solutions

Consider common approaches to AI governance:

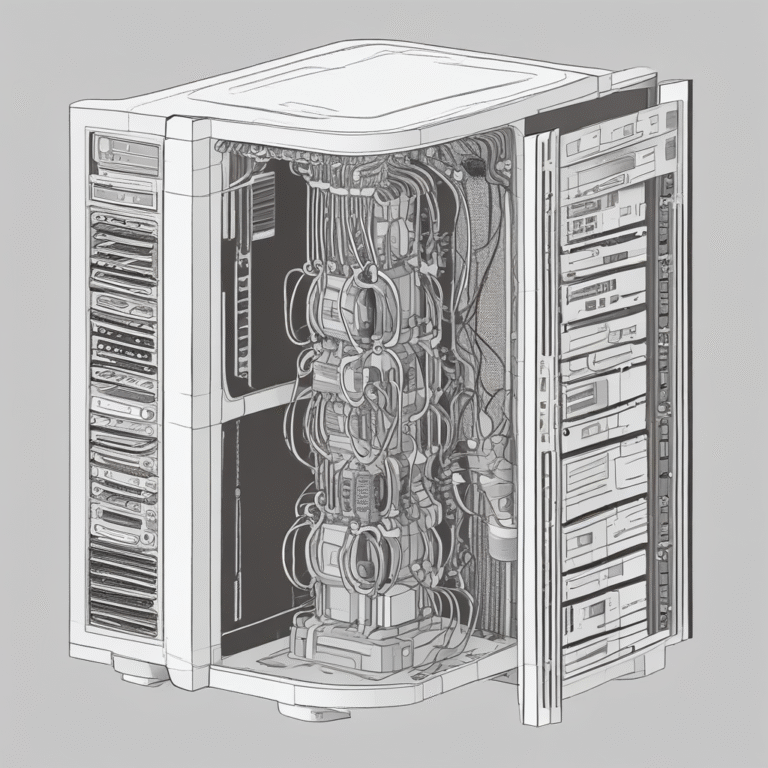

- Technical measures: Implementing ethical algorithms, bias detection tools, and explainability methods. While crucial, these can be circumvented or can become obsolete as AI evolves.

- Policies and regulations: Developing laws and guidelines for AI use. These often lag behind rapid technological advances and can be difficult to enforce across jurisdictions.

- Training and education: Equipping people with AI literacy and ethical awareness. This is vital but insufficient on its own if organizational structures and incentives do not support responsible AI implementation.

Each of these is a valuable piece of the puzzle, but as standalone solutions they are incomplete. For example, a bias detection tool might flag an issue, but if data collection practices, human biases in interpretation, or corporate pressures remain unchanged, the problem will likely re-emerge in another form.

The Power of Systems Thinking

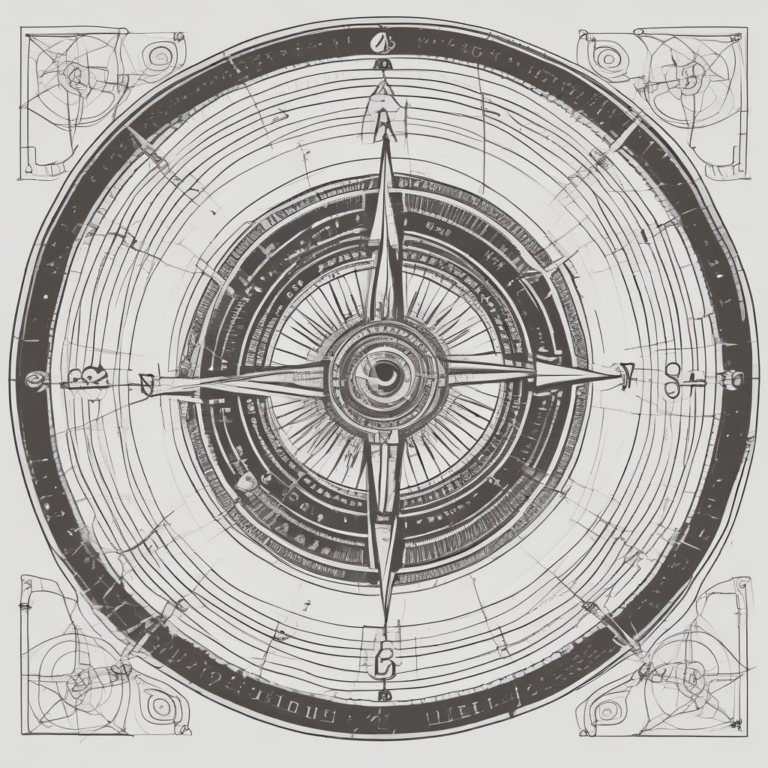

Systems thinking offers a more holistic and effective framework for AI governance. It involves:

- Understanding interconnections: Recognizing how different components of the AI ecosystem influence each other. This means looking beyond the algorithm to consider data pipelines, human operators, end users, and societal impacts.

- Identifying feedback loops: Observing how actions within the system create reinforcing or corrective cycles. For example, a poorly designed AI system might frustrate users, whose frustration in turn produces poor-quality feedback data; if that data flows back into the system, it further degrades performance, creating a reinforcing loop.

- Focusing on emergent properties: Understanding that the behavior of the entire system can be more than the sum of its parts. Ethical considerations, for example, involve not just individual AI components but how they collectively shape outcomes.

Applying Systems Thinking to AI Governance

To implement systems thinking in AI governance, organizations should:

- Map the ecosystem: Visualize all stakeholders, processes, and technologies involved in the AI lifecycle, from development to monitoring.

- Conduct impact assessments: Evaluate potential social, ethical, and economic consequences of AI systems on the entire ecosystem, not just within isolated technical parameters.

- Foster cross-functional collaboration: Bring together diverse teams (engineers, ethicists, policymakers, legal experts, social scientists, and business leaders) to build a comprehensive understanding of challenges and solutions.

- Embrace iteration and adaptation: Design governance frameworks that are flexible and can evolve alongside AI technology and its integration into society. This includes continuous monitoring and learning from system performance.

Conclusion

The path to effective AI governance requires a fundamental shift from solving isolated problems to understanding and managing the complexities of the entire AI system. By embracing systems thinking, organizations can overcome the limitations of point solutions and build AI governance frameworks that are not only technologically sound but also ethically robust and socially responsible. This holistic perspective is not just better practice; it is the missing link to building trust and ensuring AI’s beneficial integration into our world.