Is Your Organisation’s AI a Hidden Threat? Unmasking the Dangers of Shadow AI

Somewhere in your organisation right now, someone is signing up for an artificial intelligence (AI) tool. Maybe it’s a marketing manager experimenting with content generation. Perhaps it’s a developer integrating an AI coding assistant. It could be a finance team exploring automated reporting.

They’re not being reckless. They’re being resourceful. They’ve found something that makes their work faster, easier, and better. They’ve connected it to your systems, granted it access to your data, and moved on with their day.

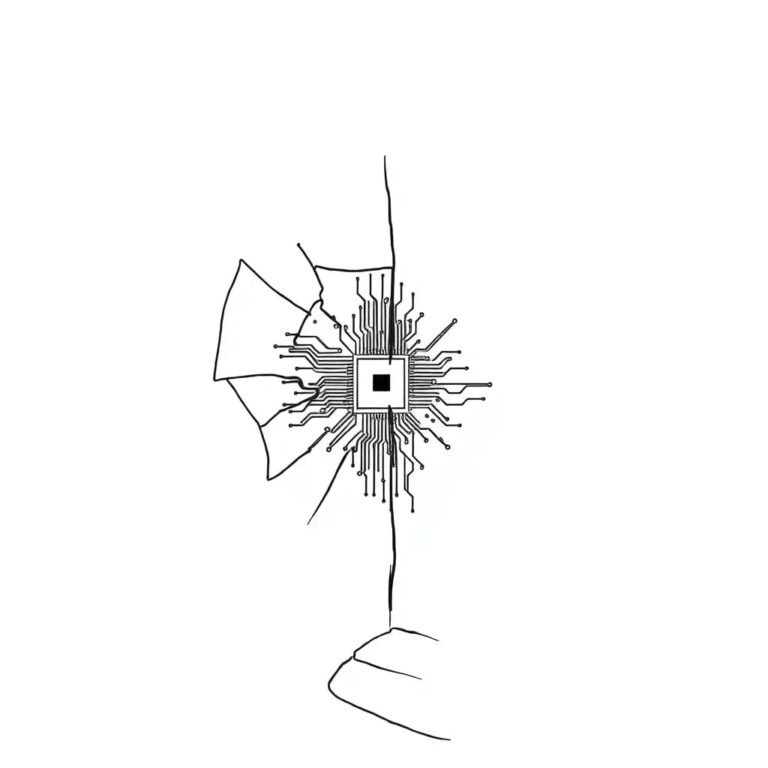

What they probably haven’t done is tell IT. And what IT almost certainly hasn’t done is bring that tool, and its associated identities, into your governance framework. This is shadow AI. And it’s creating an identity crisis that most organisations don’t yet realise they have.

The Accelerated AI Adoption

AI adoption across South African enterprises has accelerated dramatically. According to a January 2025 white paper by the World Economic Forum (WEF) and the University of Oxford’s Global Cyber Security Capacity Centre, much of current AI deployment is explorative or experimental, with organisations using smaller, use-case-based approaches that emphasise ideation and rapid implementation.

The problem isn’t the experimentation itself. Innovation requires freedom to explore. The problem is what happens next: Experiments become embedded within live business operations without the rigorous risk assessment, system testing, and governance oversight that formal deployments would demand.

The Risks of Shadow AI

Every AI tool that connects to your environment creates new identities. This includes API keys that authenticate requests, service accounts that access databases, and tokens that maintain persistent connections. These non-human identities multiply quickly, often without appearing on any register or falling under any governance policy.

In organisations where non-human identities already outnumber human users by 10:1 or more, shadow AI further accelerates this imbalance, entirely outside your security team’s visibility.

Traditional identity and access management was designed with a clear process in mind. Someone requests access, the request is evaluated, access is granted or denied, and the decision is logged. Shadow AI bypasses this entirely. There’s no request because nobody thinks to make one. There’s no evaluation because the tool seems harmless. There’s no logging because the connection happens outside sanctioned channels.

The Consequences of Unmanaged Identities

When the employee who set up an AI tool moves to a different role or leaves the company, the tool and its identities remain: orphaned, unmonitored, and potentially vulnerable. The WEF report highlights this risk directly: A lack of formal rollout programmes decreases transparency, which weakens management processes and leadership oversight. Lax software management amplifies the problem as AI gets introduced through unsanctioned browsers, plugins, and open-source tools that developers adopt without review.

Key Questions to Address

What starts with individual productivity becomes organisational exposure. Here’s a practical test. Can your organisation answer these questions with confidence?

- How many AI tools are currently connected to your systems?

- Which of these were formally approved and which arrived through informal adoption?

- What data can these tools access?

- What identities were created to enable these connections?

- Who owns these identities?

- When were the credentials last rotated?

- What happens to these connections when the employee who created them leaves?

In our work with South African enterprises, we find that most cannot answer even half of these questions. The gap here is infrastructure-related. The tools and processes designed to manage human workforce identities simply weren’t built for the sprawling population of machine identities that AI adoption creates.

Addressing Shadow AI

Addressing shadow AI requires starting with what you can control: visibility. You cannot govern what you cannot see, and you cannot see what you’re not looking for.

Discovery is the essential first step: automated scanning that identifies AI integrations across your environment, authorised and unauthorised alike. Many shadow AI deployments exist simply because there was no clear process for formal adoption. Making that process visible and accessible reduces the incentive to work around it.
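As a rough illustration of what automated discovery could look like at its simplest, the Python sketch below checks outbound connection records against a list of AI service hostnames. Everything in it is an assumption made for the example: the two-field log format, the `find_ai_connections` helper, the device names, and the short hostname list, which is nowhere near complete. A real discovery programme would draw on proxy logs, OAuth grants, browser extensions, and code repositories.

```python
from collections import Counter

# Assumption: a small, illustrative list of AI service hostnames.
# A real list would be far longer and maintained over time.
AI_SERVICE_HOSTS = {
    "api.openai.com",
    "api.anthropic.com",
    "generativelanguage.googleapis.com",
}


def find_ai_connections(log_lines):
    """Count connections to known AI hosts, grouped by (source, host).

    Assumes each log line has the hypothetical form "<source> <host>".
    """
    hits = Counter()
    for line in log_lines:
        parts = line.split()
        if len(parts) != 2:
            continue  # skip lines that do not match the assumed format
        source, host = parts
        host = host.lower()
        if host in AI_SERVICE_HOSTS:
            hits[(source, host)] += 1
    return hits


# Hypothetical sample data for the sketch.
sample_log = [
    "finance-laptop-07 api.openai.com",
    "build-server-02 api.anthropic.com",
    "finance-laptop-07 api.openai.com",
    "hr-desktop-11 intranet.example.com",
]

connections = find_ai_connections(sample_log)
for (source, host), count in sorted(connections.items()):
    print(f"{source} -> {host}: {count} connection(s)")
```

The output of a scan like this is only a starting list. Each hit still needs a conversation with the person behind it to establish what the tool does and what it can access.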

Inventory follows discovery. Every AI tool, every associated identity, is mapped to a human owner and a business purpose. This creates accountability. When someone’s name is attached to an integration, the conversation about governance becomes natural rather than adversarial.
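A minimal sketch of such an inventory, in Python, might look like the following. The `AIIdentity` record, the `review` function, the sample names, and the 90-day rotation threshold are all assumptions chosen for illustration, not a prescribed schema or policy. The point is that once each identity carries an owner, a purpose, and a rotation date, orphaned and stale credentials can be flagged mechanically.

```python
from dataclasses import dataclass
from datetime import date
from typing import Optional


@dataclass
class AIIdentity:
    tool: str                 # the AI tool the identity belongs to
    identity: str             # e.g. an API key name or service account
    owner: Optional[str]      # accountable human, or None if unknown
    business_purpose: str
    last_rotated: date


def review(inventory, active_staff, today, max_age_days=90):
    """Flag identities with no active owner or an overdue rotation.

    max_age_days=90 is an assumed threshold for this sketch only.
    """
    findings = []
    for item in inventory:
        if item.owner is None or item.owner not in active_staff:
            findings.append((item.identity, "no active owner"))
        if (today - item.last_rotated).days > max_age_days:
            findings.append((item.identity, "credential rotation overdue"))
    return findings


# Hypothetical inventory entries.
inventory = [
    AIIdentity("coding-assistant", "key-dev-assist", "thandi",
               "code completion", date(2025, 5, 1)),
    AIIdentity("report-generator", "svc-report-bot", "sipho",
               "automated finance reports", date(2024, 11, 1)),
    AIIdentity("meeting-notetaker", "key-notetaker", None,
               "unknown", date(2025, 4, 15)),
]
active_staff = {"thandi", "lerato"}

findings = review(inventory, active_staff, today=date(2025, 6, 1))
for identity, issue in findings:
    print(f"{identity}: {issue}")
```

In this example, `svc-report-bot` is flagged twice because its owner is no longer on the active staff list and its credential is well past the assumed threshold, which is exactly the orphaned-identity case described earlier.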

Policy must evolve to match reality. Comprehensive guidelines should cover how AI can be used within the organisation, how new tools are vetted before implementation, and how the identities these tools create are managed throughout their lifecycle, so that innovation doesn’t create unintended risk.

Finding Balance in AI Adoption

There’s a human element here, too. Employees adopt shadow AI because they see value in it. Blocking that adoption entirely pushes the behaviour further underground. The more effective approach combines enablement with governance.

This means ensuring IT administrators are educated and skilled enough to evaluate and manage AI tools. It means creating clear pathways for employees to request new tools, with response times that don’t drive them toward unofficial alternatives. It means recognising that AI adoption is inevitable and positioning your identity framework to accommodate it rather than resist it.

The organisations getting this right treat shadow AI as a signal, not just a threat. They understand where and why employees want AI solutions. These insights can inform strategic AI adoption that delivers productivity benefits while maintaining security and governance.

The Growing Complexity of Shadow AI

Shadow AI is a current reality that grows more complex with each passing week. Every new tool adopted without oversight, every API key created without governance, and every service account that exists outside your identity framework adds to a body of risk that becomes harder to address the longer it is ignored.

The good news is that the principles for managing this challenge already exist. Discovery, inventory, ownership, lifecycle management, and continuous monitoring are the same fundamentals that apply to any identity population. What’s required is to extend their reach to encompass the AI tools already operating in your environment.

The AI your team adopted last week has its own identity. The question is whether you’ll manage it proactively or discover it during an incident investigation.