The UK and the EU: Navigating the Complexities of AI Regulation

On February 3, 2026, the UK Information Commissioner’s Office (ICO) opened a formal investigation into xAI over the processing of personal data in the creation of non-consensual sexualized imagery by its chatbot Grok. The investigation will determine whether xAI complied with UK data protection law. If xAI is found to be in breach, the ICO can impose fines under the UK GDPR and the Data Protection Act 2018 of up to £17.5 million or 4% of xAI’s total annual worldwide turnover, whichever is higher.
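Under the UK GDPR, the higher maximum fine is whichever of the two figures is greater, so the cap can be written as a simple maximum. This is an illustration only: $T$ stands for a hypothetical total annual worldwide turnover for the preceding financial year, not a reported figure for xAI, and the formula gives the statutory ceiling rather than the fine actually imposed.

$$
F_{\max} = \max\left(\text{£}17.5\ \text{million},\ 0.04 \times T\right)
$$

For a hypothetical turnover of £1 billion, 4% is £40 million, which exceeds £17.5 million, so the cap would be £40 million. The percentage-based figure overtakes the fixed amount only once turnover exceeds £437.5 million (£17.5 million ÷ 0.04).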

This investigation runs alongside action by Ofcom, which enforces the Online Safety Act 2023; that Act also made it a criminal offence to share intimate deepfakes without consent. In addition, the Data (Use and Access) Act 2025 criminalizes creating, or requesting the creation of, intimate deepfake images of adults in the UK, although it does not currently penalize the technology providers whose tools produce such content.

The legal picture is further complicated by parallel proceedings in the EU: French prosecutors raided xAI’s offices in Paris, and the European Commission opened its own inquiry under the Digital Services Act (DSA). The Commission’s inquiry will assess whether xAI has adequately mitigated the systemic risks associated with Grok, including the spread of illegal content and gender-based violence.

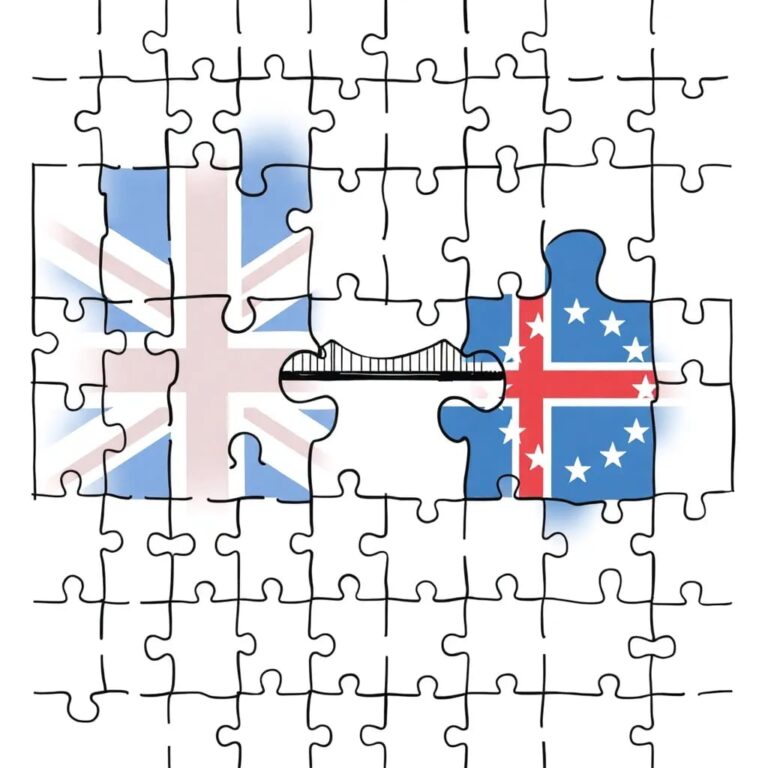

Diverging Regulatory Approaches

The contrasting enforcement strategies of the UK and the EU reveal significant differences between their data protection and online safety regimes. The UK, drawing on its common law tradition, tends to react to specific harms as they arise. The EU, by contrast, seeks to establish a preventive framework for managing systemic risk, although this may slow its response to rapid developments in AI technology.

The Brussels Effect, a term coined by Anu Bradford, describes how the EU leverages the size of its consumer market to set regulatory standards that spread globally. While this approach proved effective in the era of surveillance capitalism, it is struggling to keep pace with advances in AI. In November 2025, the European Commission published its Digital Omnibus Regulation Proposal, which seeks to delay certain provisions of the AI Act and to amend the GDPR to make it easier to train AI models on European data, reflecting a shift towards deregulation.

Transparency and Regulation of AI Content

Article 50 of the EU AI Act imposes transparency obligations on generative AI: providers must ensure that the content their systems generate is marked in a machine-readable format and detectable as artificially generated. Application of these rules has been proposed for 2027, and the delay may blunt their immediate regulatory effect. The UK, by contrast, currently has no legal requirement to watermark AI-generated images, although the UK government’s 2024 consultation on Copyright and Artificial Intelligence acknowledged the potential benefits of labeling AI-generated content.

Case Studies in Enforcement

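The ICO and Ofcom have moved quickly on the deepfake issue by pursuing both xAI, the developer of Grok, and X, the platform on which Grok’s output is shared. This shows enforcement operating at different points in the data lifecycle, from the processing of personal data to the distribution of the resulting content. Distinguishing between the two entities is critical, because it allows UK regulators to respond swiftly to each specific online harm.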

Similar contrasts emerge in competition and antitrust law. In 2023, the UK Competition and Markets Authority (CMA) blocked Microsoft’s $69 billion acquisition of Activision Blizzard, citing Microsoft’s strong position in the cloud gaming market. The European Commission approved the deal subject to behavioral commitments on cloud game streaming, whereas the CMA cleared it only after the deal was restructured so that Activision’s cloud streaming rights outside the European Economic Area were sold to Ubisoft, a structural remedy intended to preserve competition.

Biotechnology and Regulatory Burdens

In biotechnology and agri-tech, the UK enacted the Genetic Technology (Precision Breeding) Act 2023, which simplifies the regulation of gene-edited organisms relative to the EU’s stricter requirements. The Act gives precision-bred organisms a faster route to market in England, whereas in the EU such organisms face more comprehensive documentation and labeling requirements.

Conclusion: A Dual Approach to AI Regulation

The UK’s framework for tackling deepfake technology centers on bringing legal action over specific instances of harm, whereas the EU focuses on procedural failures in risk assessment before software is deployed. The UK system may be better at addressing immediate harms, but the EU’s approach could have broader, longer-term effects on companies like xAI. Each system has distinct strengths, and the two could complement each other, strengthening accountability in the post-Brexit digital landscape.