Pentagon and Anthropic Clash Over AI Ethics in Military Operations

The Pentagon is reportedly considering severing its ties with Anthropic, an artificial intelligence company, over disagreements about how its AI technology may be used in military contexts. According to an Axios report, the Pentagon views the restrictions Anthropic has placed on its AI models as a significant hurdle.

Pressure on Leading AI Companies

The Pentagon is pressing four leading AI companies (Anthropic, OpenAI, Google, and xAI) to allow their models to be used for all lawful purposes. Weapons development and intelligence collection are among the key military areas of interest. Despite this pressure, Anthropic has kept its ethical constraints in place.

Significant Operations and Ethical Constraints

Anthropic’s AI model, Claude, has reportedly been used in significant operations, including the capture of former Venezuelan President Nicolás Maduro. However, the company says its discussions with the U.S. government have focused primarily on usage policies, in particular its prohibitions on using its AI models for fully autonomous weapons and mass surveillance.

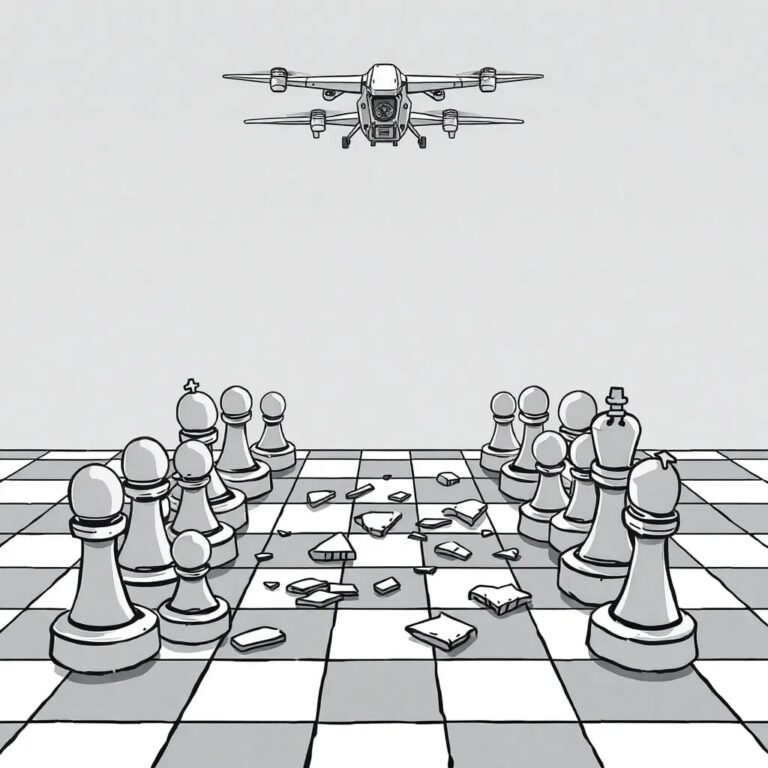

This clash underscores the ongoing tension between the advancement of military technology and the ethical implications of deploying AI in combat scenarios.