Scaling Enterprise AI in 2026 Requires Governance

As organizations prepare to deploy AI at enterprise scale in 2026, the emphasis has shifted from experimentation to establishing a robust framework for governance and security. This shift moves beyond isolated pilots toward a full lifecycle system that covers data foundations, model development, deployment, and user interaction.

The Challenge Ahead

The foremost challenge in scaling AI is not infrastructure but readiness in skills and governance. Many organizations aspire to adopt AI yet lack the security, risk, and compliance capabilities that successful implementation requires. This gap limits visibility into:

- Where AI is utilized

- What data it consumes

- How models behave over time

- Who is accountable

Such limitations expose organizations to risks including data leakage, bias, misuse, and compliance issues.
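The four visibility questions above can be tracked in a simple AI inventory. The following is a minimal sketch, not a prescribed tool: the `AISystemRecord` class, its field names, and the `visibility_gaps` helper are all hypothetical and chosen only to mirror the list above.

```python
from dataclasses import dataclass
from datetime import date
from typing import List, Optional


@dataclass
class AISystemRecord:
    """One entry in a hypothetical AI inventory (field names are illustrative)."""
    name: str
    business_use: str                      # where AI is utilized
    data_sources: List[str]                # what data it consumes
    last_behavior_review: Optional[date]   # how the model behaves over time
    accountable_owner: Optional[str]       # who is accountable


def visibility_gaps(record: AISystemRecord) -> List[str]:
    """Return the visibility questions this record cannot yet answer."""
    gaps = []
    if not record.business_use.strip():
        gaps.append("where AI is utilized")
    if not record.data_sources:
        gaps.append("what data it consumes")
    if record.last_behavior_review is None:
        gaps.append("how the model behaves over time")
    if not record.accountable_owner:
        gaps.append("who is accountable")
    return gaps


# Example entry with two unanswered questions (all values are made up).
chatbot = AISystemRecord(
    name="support-chatbot",
    business_use="customer support triage",
    data_sources=[],
    last_behavior_review=None,
    accountable_owner="head-of-support",
)
print(visibility_gaps(chatbot))
# → ['what data it consumes', 'how the model behaves over time']
```

Even a register this small makes gaps explicit: any system whose record returns a non-empty list has a visibility question that nobody can currently answer.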

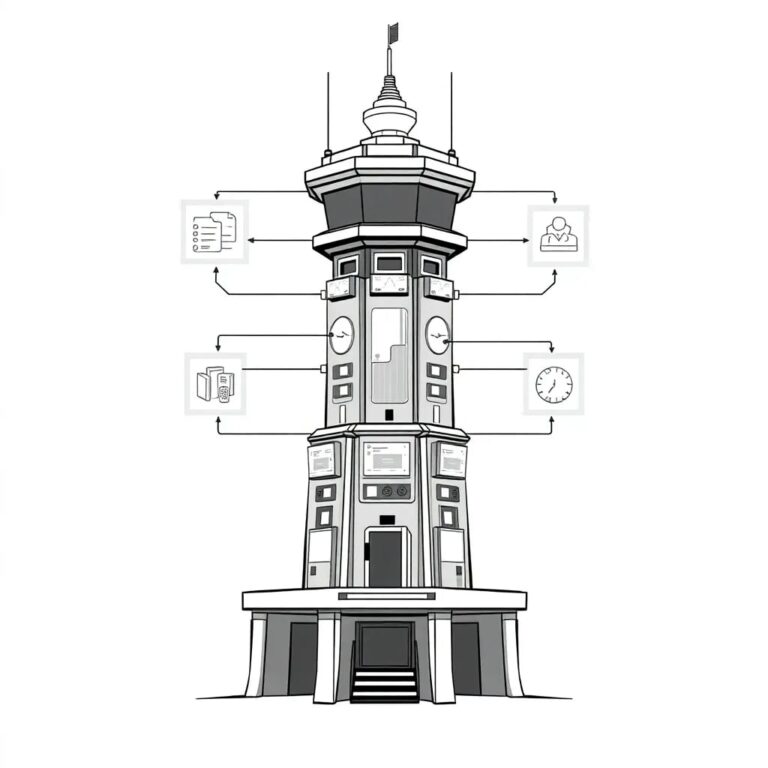

The Role of Value-Added Resellers (VARs)

Value-Added Resellers play a critical role in bridging the gap between innovation and enterprise-grade execution. By embedding cybersecurity, data governance, and responsible AI principles into the design and deployment of AI solutions, VARs can help mitigate risks from the outset.

Addressing Risks Early

When deploying AI for clients, it is imperative to address risks such as deepfakes, cyber fraud, and data privacy violations from the very beginning. This requires understanding:

- How and where AI is applied

- What data it interacts with

- How decisions are derived

Building safeguards early in the process lets organizations implement strong identity controls, validate data, and establish continuous monitoring. Paired with privacy-by-design principles, these measures support model governance and explainability, which are essential for maintaining transparency, accountability, and trust.
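As a rough illustration of how these three safeguards can sit around a single model call, here is a minimal sketch. Everything in it is an assumption made for the example: the role names, the required input fields, the in-memory `audit_log`, and the `guarded_call` wrapper are hypothetical, and a real deployment would rely on an identity provider, a schema validator, and a monitoring pipeline instead.

```python
from dataclasses import dataclass

ALLOWED_ROLES = {"analyst", "support-agent"}   # hypothetical role names
REQUIRED_FIELDS = {"customer_id", "query"}     # hypothetical input schema

audit_log = []  # stand-in for a real monitoring pipeline


@dataclass
class Request:
    caller_role: str
    payload: dict


def guarded_call(request: Request, model) -> str:
    """Wrap a model call with identity, validation, and monitoring checks."""
    # Identity control: only known roles may invoke the model.
    if request.caller_role not in ALLOWED_ROLES:
        audit_log.append({"event": "denied", "role": request.caller_role})
        raise PermissionError(f"role {request.caller_role!r} is not allowed")

    # Data validation: reject inputs that lack required fields.
    missing = REQUIRED_FIELDS - set(request.payload)
    if missing:
        audit_log.append({"event": "invalid_input", "missing": sorted(missing)})
        raise ValueError(f"missing fields: {sorted(missing)}")

    # Continuous monitoring: record every served request for later review.
    answer = model(request.payload)
    audit_log.append({"event": "served", "role": request.caller_role})
    return answer


def echo_model(payload: dict) -> str:
    """Placeholder for a real model; it only echoes the query."""
    return f"received: {payload['query']}"


result = guarded_call(Request("analyst", {"customer_id": 1, "query": "hi"}), echo_model)
print(result)  # → received: hi
```

Placing the checks in one wrapper means every call, allowed or refused, leaves an audit entry, which is what makes later accountability reviews possible.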

Adopting Cloud-Native and Hybrid Architectures

To navigate the complexities of AI deployment, organizations are adopting cloud-native and hybrid architectures. These architectures provide the necessary speed and flexibility while aligning with regulatory controls. This approach supports initiatives such as Digital India and IndiaAI, promoting responsible, scalable, and compliant enterprise adoption across India.

In conclusion, as enterprise AI evolves, the need for effective governance becomes increasingly clear. Organizations must prioritize governance, security, and skills readiness to harness the full potential of AI responsibly and effectively.