AI, A2A, and the Governance Gap

Over the past six months, a recurring pattern has emerged across enterprise AI teams. Agent2Agent (A2A) and the Agent Communication Protocol (ACP) dominate architecture reviews, with elegant protocol designs and impressive demonstrations. Then, about three weeks into production, someone asks, “Which agent authorized that $50,000 vendor payment at 2 am?” The initial excitement rapidly turns into apprehension.

The Paradox of Efficiency and Accountability

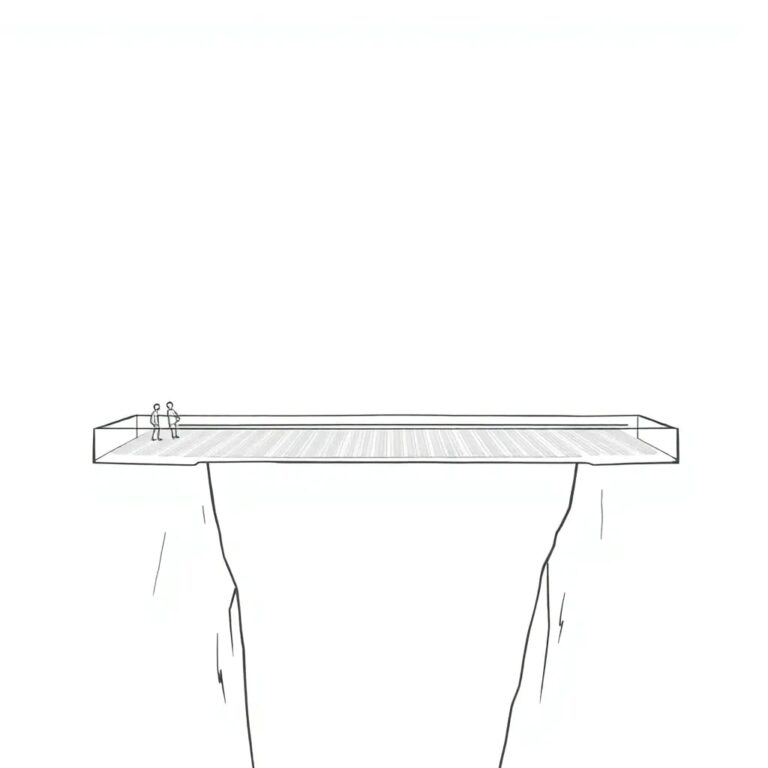

The paradox is that A2A and ACP are so effective at minimizing integration friction that they inadvertently remove the natural governance brakes: the integration hurdles that previously forced essential conversations. The technical protocols are robust, but the organizational protocols are conspicuously absent. Organizations find themselves moving from asking “Can these systems connect?” to asking “Who authorized this agent to liquidate a position at 3 am?” The result is a significant governance gap: the capacity to connect agents is advancing faster than the ability to control the commitments they make.

The Evolution of the Agent Stack

To understand how quickly this shift is happening, look at the evolving agent stack. A new three-tier structure is emerging, supplanting traditional API-led connectivity:

- Tooling: MCP (Model Context Protocol). Connects agents to local data and specific tools, analogous to a worker’s toolbox.

- Context: ACP (Agent Communication Protocol). Standardizes how goals, user history, and state move between agents, analogous to a worker’s memory and briefing.

- Coordination: A2A (Agent2Agent). Handles discovery, negotiation, and delegation across boundaries, analogous to a contract or handshake.

This stack transforms multi-agent workflows into a configuration problem rather than a custom engineering project. However, this also leads to an expanded risk surface, often overlooked by Chief Information Security Officers (CISOs).

The Rise of Agent Sprawl

Enterprises already struggle to govern hundreds of SaaS applications, averaging over 370 per organization. Agent protocols do not simplify this complexity; they circumvent it. In the API era, a human had to trigger system actions. In the A2A era, agents use “Agent Cards” to discover one another and negotiate operations. ACP allows for context-rich exchanges, enabling seamless handoffs from customer support to fulfillment to partner logistics without human involvement. The friction that once curtailed risk is gone, and the result is a proliferation of semi-autonomous processes acting on behalf of the organization.

Identifying the Governance Gap

This governance gap rarely manifests as a single catastrophic failure; rather, it is evident through a series of small, perplexing incidents where the dashboards indicate everything is “green,” yet the business outcomes are suboptimal. The protocol documentation may emphasize encryption and handshakes but often neglects the emergent failure modes of autonomous collaboration. These issues are not merely bugs; they are symptoms of an architectural framework lagging behind the autonomy enabled by the protocols.

Common Failure Modes

- Policy Drift: A refund policy encoded in a service agent may operate in conjunction with a partner’s collections agent via A2A, yet their underlying business logic might conflict. When issues arise, ownership of the end-to-end behavior becomes murky.

- Context Oversharing: Expanding an ACP schema to include “User Sentiment” for personalization can inadvertently propagate this data to every downstream agent, transforming local enrichment into widespread exposure.

- The Determinism Trap: Unlike REST APIs, agents are non-deterministic. A change in an agent’s refund policy logic, driven by an update to its underlying model, may break downstream workflows, often without a version trace to diagnose the problem.
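
One way to give the Determinism Trap a version trace is to fingerprint everything that shapes an agent’s behavior, not only its interface schema. The sketch below is a minimal illustration under stated assumptions: the card dictionary and its fields (`schema_version`, `model_id`, `policy_prompt`) are hypothetical and are not taken from the A2A Agent Card specification.

```python
import hashlib
import json


def behavior_fingerprint(card: dict) -> str:
    """Hash the fields that influence behavior, not just the interface schema.

    The field names are hypothetical placeholders for this sketch.
    """
    relevant = {
        "schema_version": card["schema_version"],
        "model_id": card["model_id"],
        "policy_prompt": card["policy_prompt"],
    }
    # Canonical JSON so the same content always yields the same hash.
    canonical = json.dumps(relevant, sort_keys=True, separators=(",", ":"))
    return hashlib.sha256(canonical.encode("utf-8")).hexdigest()[:16]


def needs_review(approved_fingerprint: str, card: dict) -> bool:
    """True when the live card no longer matches what was approved."""
    return behavior_fingerprint(card) != approved_fingerprint


approved_card = {
    "schema_version": "1.2.0",
    "model_id": "model-2024-06",  # illustrative identifier
    "policy_prompt": "Refund up to 100 USD without escalation.",
}
approved = behavior_fingerprint(approved_card)

# Same schema, same prompt, but the underlying model was swapped.
updated_card = dict(approved_card, model_id="model-2024-11")

unchanged_flag = needs_review(approved, approved_card)
updated_flag = needs_review(approved, updated_card)
print(unchanged_flag, updated_flag)
```

Here the schema version never changes, yet the model swap alone flips the fingerprint, which is the kind of trace a schema-only versioning scheme would miss.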

These failure modes are not anomalies; they stem from granting agents greater autonomy without upgrading the rules governing their interactions. The technical capability for agent collaboration has surpassed the organization’s ability to define appropriate collaboration boundaries.

The Need for an Agent Treaty Layer

To address these challenges, organizations need an explicit “Agent Treaty” layer. If protocols serve as the language of interaction, the treaty acts as the constitution governing those interactions. Governance must move from peripheral documentation to policy as code.

Key Components of the Treaty Layer

- Define Treaty-Level Constraints: Rather than merely authorizing connections, outline specific scopes for each connection, detailing which ACP fields an agent can share and categorizing operations as “read-only” or “legally binding.”

- Version Behavior, Not Just Schema: Treat Agent Cards as primary product surfaces. Any change in the underlying model should trigger a version bump, necessitating a treaty review.

- Cross-Organizational Traceability: Implement observability mechanisms that not only track latency but also capture intent, creating accountability for agent commitments.
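
The first two components above can be sketched as policy as code. This is an illustrative sketch only: the `Treaty` structure, the `OperationClass` names, and the agent and field names are assumptions made for the example and do not come from the A2A or ACP specifications.

```python
from __future__ import annotations

from dataclasses import dataclass
from enum import Enum


class OperationClass(Enum):
    READ_ONLY = "read_only"
    LEGALLY_BINDING = "legally_binding"


@dataclass(frozen=True)
class Treaty:
    """Hypothetical per-connection treaty between two agents."""
    source_agent: str
    target_agent: str
    shareable_fields: frozenset[str]          # context fields allowed to cross
    allowed_operations: frozenset[OperationClass]
    approved_card_version: str                # version last reviewed


def check_request(
    treaty: Treaty,
    operation: OperationClass,
    context: dict,
    card_version: str,
) -> list[str]:
    """Return a list of treaty violations; an empty list means allowed."""
    violations = []
    if operation not in treaty.allowed_operations:
        violations.append(f"operation '{operation.value}' not permitted")
    extra = sorted(set(context) - treaty.shareable_fields)
    if extra:
        violations.append(f"fields not shareable: {', '.join(extra)}")
    if card_version != treaty.approved_card_version:
        violations.append(
            f"card version {card_version} differs from reviewed "
            f"version {treaty.approved_card_version}"
        )
    return violations


treaty = Treaty(
    source_agent="service-agent",
    target_agent="partner-collections-agent",
    shareable_fields=frozenset({"order_id", "refund_amount"}),
    allowed_operations=frozenset({OperationClass.READ_ONLY}),
    approved_card_version="2.1.0",
)

violations = check_request(
    treaty,
    OperationClass.LEGALLY_BINDING,
    {"order_id": "A-17", "user_sentiment": "frustrated"},
    card_version="2.2.0",
)
for violation in violations:
    print(violation)
```

Returning every violation rather than stopping at the first keeps the result useful as an audit record, which also supports the traceability component.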

Designing this treaty layer requires a shift in mindset and collaboration across departments. Engineers must consider multi-agent game theory and policy interactions, while compliance teams need innovative tools to audit autonomous workflows that operate at machine speed.

The Urgency of Addressing the Governance Gap

Enterprises are at a pivotal point in their adoption journey. Three converging trends highlight the urgency of addressing the governance gap:

- Protocol Maturity: A2A, ACP, and MCP specifications have matured, leading organizations to move from pilot programs to production deployments.

- Multi-Agent Orchestration: The shift from isolated agents to agent ecosystems and workflows that span organizational boundaries is accelerating.

- Silent Autonomy: The blurring of lines between “tool assistance” and “autonomous decision-making” often goes unacknowledged, complicating governance.

The next 18 months will be critical in determining whether enterprises can preemptively address these challenges or face a wave of failures necessitating retroactive governance.

Practical Steps Forward

To mitigate risks associated with A2A and ACP, enterprises should take the following actions:

- Map the Context Flow: Tag every ACP field with a “purpose limitation” identifier to clarify visibility and data dependencies.

- Audit Commitments: Identify every A2A interaction involving financial or legal commitments, particularly those bypassing human approval.

- Code the Treaty: Develop a “gatekeeper” agent to enforce business constraints on protocol traffic, ensuring policy visibility and enforcement at runtime.

- Instrument for Learning: Capture data on agent collaborations, invoked policies, and shared contexts, using this information for ongoing governance adjustments.
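
The steps above could be combined in a single runtime checkpoint. The following is a minimal sketch under stated assumptions, not a reference design: the `Gatekeeper` class, the `FIELD_PURPOSES` tags, the agent names, and the approval threshold are all hypothetical, and a real deployment would sit in front of actual protocol traffic rather than plain dictionaries.

```python
from dataclasses import dataclass, field
from datetime import datetime, timezone
from typing import Optional

# Hypothetical purpose-limitation tags: which purposes may see each field.
FIELD_PURPOSES = {
    "order_id": {"support", "fulfillment", "logistics"},
    "shipping_address": {"fulfillment", "logistics"},
    "user_sentiment": {"support"},
}


@dataclass
class Gatekeeper:
    """Filters context by purpose, holds large commitments, logs everything."""
    approval_threshold: float
    audit_log: list = field(default_factory=list)

    def forward(
        self,
        sender: str,
        receiver: str,
        purpose: str,
        context: dict,
        commitment_amount: float = 0.0,
        human_approved: bool = False,
    ) -> Optional[dict]:
        # Untagged fields are withheld by default.
        allowed = {
            key: value
            for key, value in context.items()
            if purpose in FIELD_PURPOSES.get(key, set())
        }
        withheld = sorted(set(context) - set(allowed))
        blocked = commitment_amount > self.approval_threshold and not human_approved
        self.audit_log.append({
            "time": datetime.now(timezone.utc).isoformat(),
            "sender": sender,
            "receiver": receiver,
            "purpose": purpose,
            "withheld_fields": withheld,
            "commitment_amount": commitment_amount,
            "blocked": blocked,
        })
        return None if blocked else allowed


gate = Gatekeeper(approval_threshold=1000.0)

# Sentiment is tagged for support only, so logistics never sees it.
passed = gate.forward(
    "support-agent", "logistics-agent", "logistics",
    {"order_id": "A-17", "user_sentiment": "frustrated"},
)

# A large commitment without human approval is held back.
held = gate.forward(
    "procurement-agent", "vendor-agent", "fulfillment",
    {"order_id": "A-18"}, commitment_amount=50000.0,
)
print(passed, held, len(gate.audit_log))
```

The audit log records both forwarded and blocked requests, so the same data that enforces the treaty can feed later governance adjustments.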

Implementing these steps gives organizations a repeatable pattern for agent deployments and clearer accountability for what agents commit to. If the effort succeeds, organizations will have a working framework for managing their agent ecosystem. If it falls short, the exercise will still surface gaps in the architecture before they turn into production incidents.

As we transition from treating APIs as products to viewing autonomous workflows as policies encoded in agent interactions, it is imperative to establish the necessary governance frameworks now. The protocols are ready, but organizational structures must evolve concurrently. The Agent Treaty represents a critical step towards ensuring that policy is both machine-enforceable and adaptable.

The sooner organizations begin this journey, the sooner they can close the governance gap.