2026 Predictions: AI Is Breaking Identity and Data Security

Artificial intelligence is fundamentally changing how organizations work and how risk materializes. What once felt experimental is now operational: generative AI is in the hands of employees, autonomous agents execute workflows, and sensitive data moves at machine speed across SaaS and generative AI applications, cloud environments, on-premises systems, endpoints, and email.

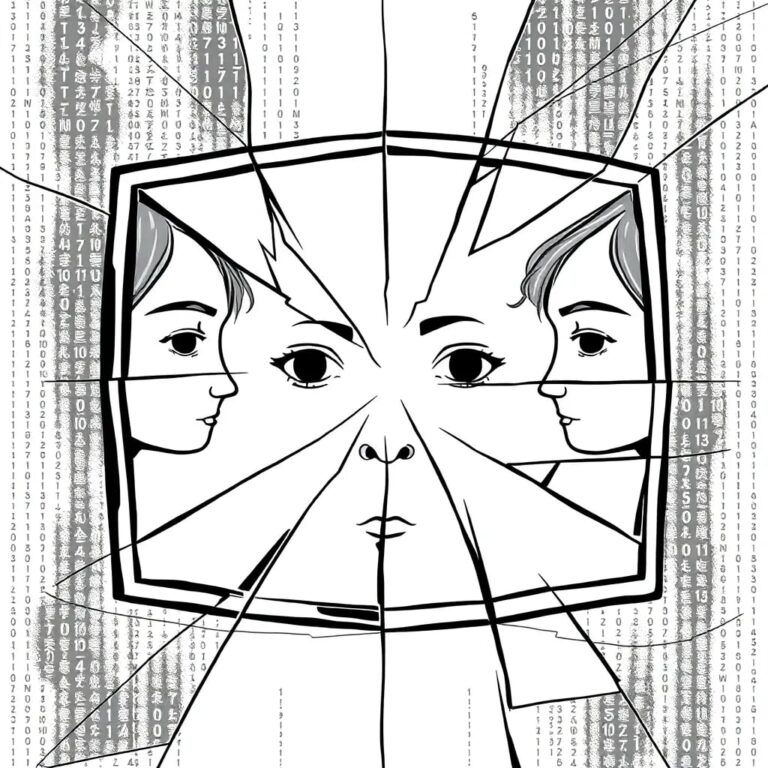

In 2026, security leaders are being forced to confront a hard truth: in an AI-driven world, treating identity and data as isolated silos undermines existing security models and creates blind spots that are easy to miss and hard to manage.

Historical Context

Historically, identity and data lived in separate domains, with different teams, different tools, and different success metrics. Identity determined who could access systems, while data security focused on what was being accessed. Together, they governed when and where access occurred.

AI breaks that model. AI agents operate across identity and data simultaneously: they take actions and generate, analyze, and transmit sensitive information, sometimes without a human in the loop. As a result, risk no longer fits neatly into identity and access management (IAM) or data loss prevention (DLP) alone.

Cybersecurity Trends for 2026

1. Security Will Return to First Principles: Identity and Data

As environments grow more complex, security will come full circle. Organizations will recognize that most modern controls are abstractions layered on top of two foundational questions: who or what is acting, and what data is being used. Networks, perimeters, and destinations will matter less than identity and data context.

Identity defines intent and accountability. Data defines value and risk. Everything else becomes an implementation detail. This change won’t be nostalgic; it will be pragmatic. AI introduces too much speed and autonomy for surface-level controls to keep up, forcing security teams to rethink their anchors.

2. AI Will Break Deterministic, Rule-Based Risk Models

Traditional security assumes predictable behavior, but AI is a non-deterministic wild card. AI agents and systems learn, adapt, and evolve, introducing risks that static policies and Boolean logic can’t manage. Organizations will move toward adaptive risk models that evaluate behavior, identity (human, non-human, and AI systems), and data sensitivity in real time, weighing context rather than relying on predefined assumptions.
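To make the contrast concrete, here is a minimal, hypothetical sketch in Python of a static Boolean rule next to a context-weighted risk score. Everything in it is an illustrative assumption, not a model from any product or standard: the names `AccessRequest`, `static_rule`, `adaptive_risk_score`, and `decide`, the actor types and sensitivity labels, the weights, and the 0.5 threshold. It only shows the shape of the idea (combining identity, data sensitivity, and behavior into one decision); a production system would tune or learn these values from telemetry rather than hard-code them.

```python
from dataclasses import dataclass

# Hypothetical sensitivity tiers and actor types; all weights are illustrative.
SENSITIVITY = {"public": 0.0, "internal": 0.3, "confidential": 0.6, "restricted": 1.0}
ACTOR_RISK = {"human": 0.1, "service_account": 0.3, "ai_agent": 0.5}


@dataclass
class AccessRequest:
    actor_type: str       # "human", "service_account", or "ai_agent"
    role_allows: bool     # what a static, role-based policy would say
    data_label: str       # sensitivity label of the data being touched
    anomaly: float        # 0.0 (typical behavior) to 1.0 (highly unusual)
    human_in_loop: bool   # whether a person approved this action


def static_rule(req: AccessRequest) -> bool:
    """Deterministic Boolean policy: allow whenever the role permits it."""
    return req.role_allows


def adaptive_risk_score(req: AccessRequest) -> float:
    """Combine identity, data sensitivity, and behavior into a 0..1 score."""
    score = (
        0.35 * ACTOR_RISK[req.actor_type]
        + 0.35 * SENSITIVITY[req.data_label]
        + 0.30 * req.anomaly
    )
    if req.human_in_loop:
        score *= 0.5  # human approval lowers, but does not remove, the risk
    return min(score, 1.0)


def decide(req: AccessRequest, threshold: float = 0.5) -> str:
    """Role check is a hard floor; the score decides allow vs. step-up."""
    if not req.role_allows:
        return "deny"
    if adaptive_risk_score(req) >= threshold:
        return "step_up"  # e.g., require human approval or extra verification
    return "allow"


# An AI agent with a permitted role touching restricted data, acting unusually.
agent = AccessRequest("ai_agent", True, "restricted", 0.8, False)
print(static_rule(agent))  # True: the Boolean rule sees nothing wrong
print(decide(agent))       # step_up: context pushes the score over the threshold
```

The design keeps the role check as a hard floor, so the score can only escalate an otherwise permitted action, never grant one the static policy would deny.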

3. CISOs Will Move from Gatekeepers to Enablers of Trusted Autonomy

The role of the CISO is changing, and that shift will accelerate in 2026. For years, security leaders were positioned as gatekeepers responsible for saying no to risky behavior. In the era of AI, that posture won’t scale. The mission will be to guide innovation safely by designing trust into the systems that enable the business to thrive.

4. Agentic AI Adoption Will Outpace Its Reliability

Agentic AI will move from experimentation to execution faster than most organizations expect. In 2026, AI agents won’t just assist users; they’ll provision access, move data, generate content, and make decisions on behalf of the business. Adoption will accelerate because the value is real, but reliability will lag, leading to frequent, subtle failures.

5. AI Regulation Will Arrive: Imperfect, but Unavoidable

In 2026, AI regulation will become a reality. Federal, state, and local governments will introduce guidelines focused on accountability, explainability, and data protection. These rules won’t dictate architectures but will demand clearer answers to basic questions: Who acted? What data was used or exposed? Why was a decision made?

The Bottom Line: Identity and Data Security Must Converge

As we kick off 2026, the lines between user and agent, access and action, and data content and context will continue to blur, and so will the boundaries of traditional IT environments. What matters isn’t visibility or control in isolation. It’s understanding risk continuously and in real time. Identity and data are no longer separate problems; they’re part of the same challenge and must be part of the same solution.

Companies that embrace this convergence and build trust into innovation will unlock a new era of resilience and productivity across the business. In 2026, security leaders will be at the helm of this transition.