Kiteworks Warns AI Security Gaps Leave Energy Infrastructure Exposed to Nation-State Attacks

New research from Kiteworks identifies several risks emerging across the energy and utilities sector in 2026. According to the findings, gaps in AI red-teaming leave operational systems untested against nation-state threats, and weak centralized monitoring allows attacks to persist undetected. Organizations also face extended dwell times during incident response because they lack AI-specific playbooks, while limited board-level engagement continues to delay necessary security investments.

Compounding these issues are encryption gaps in AI training data that expose sensitive operational intelligence. The research underscores that AI governance in the sector is still at an early stage and remains insufficient. Only 9% of energy organizations report conducting AI red-teaming, and just 14% maintain AI-specific incident response playbooks, leaving critical systems vulnerable to increasingly sophisticated adversaries.

Predictions for 2026

Kiteworks predicts that during 2026, nation-state actors will exploit red-teaming gaps to compromise critical infrastructure AI. Weak centralized monitoring will leave AI attacks undetected until physical impacts occur. Incident response gaps will extend the dwell time and damage of AI compromises, while insufficient board attention to AI governance will delay critical security investments. Additionally, encryption gaps will expose AI training data containing grid operations intelligence.

Kiteworks recognizes the sector’s strengths in point controls, dataset access controls, isolated training environments, and privacy impact assessments. However, it notes that the energy and utilities sector trails in centralized monitoring, adversarial testing, and incident response capabilities necessary to defend AI systems against nation-state actors and sophisticated threat groups.

Critical Vulnerabilities

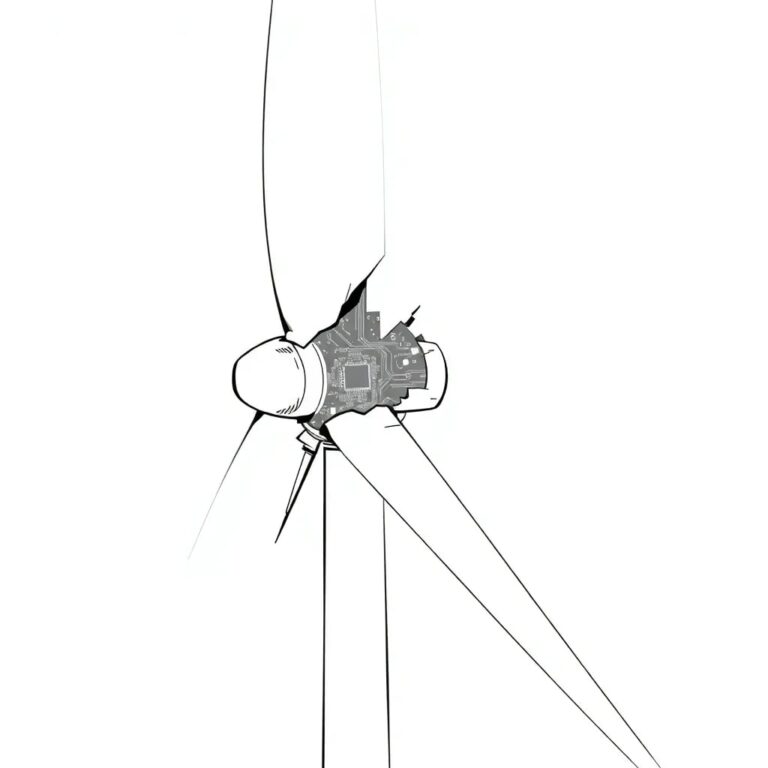

The report identifies the red-teaming gap as the most dangerous vulnerability for the energy sector. At just 9%, the sector trails the global average by 15 points and sits far behind defense and security (55%), technology (34%), and financial services (30%). For critical infrastructure increasingly dependent on AI for grid optimization, predictive maintenance, demand forecasting, and pipeline monitoring, this gap is an invitation to sophisticated adversaries.

With 91% of energy organizations not conducting AI red-team exercises, adversaries face untested attack surfaces. Prompt injection attacks on grid management AI, adversarial inputs to pipeline monitoring systems, and model poisoning in predictive maintenance remain untested in the vast majority of energy organizations.

Recommendations for Improvement

Organizations must focus on establishing AI red-teaming programs immediately, especially for AI systems interfacing with operational technology. Engaging specialized red-team services with critical infrastructure experience is crucial. Testing should cover prompt injection, adversarial inputs, model poisoning, and data extraction across grid management, pipeline monitoring, and predictive maintenance systems. This is a national security priority, not just an organizational one.
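As a rough illustration of the testing scope described above, the sketch below enumerates every combination of attack type and system so that untested pairings are visible. The function names, the `status` field, and the coverage calculation are hypothetical and not part of the Kiteworks research; only the attack types and system categories come from the recommendation itself.

```python
from itertools import product

# Attack types and system categories named in the recommendation.
ATTACK_TYPES = ["prompt injection", "adversarial inputs",
                "model poisoning", "data extraction"]
SYSTEMS = ["grid management", "pipeline monitoring", "predictive maintenance"]


def build_test_matrix(attack_types, systems):
    """Return one test case per (system, attack) pair, all initially untested."""
    return [
        {"system": system, "attack": attack, "status": "untested"}
        for system, attack in product(systems, attack_types)
    ]


def coverage(matrix):
    """Fraction of test cases whose status is anything other than 'untested'."""
    if not matrix:
        return 0.0
    tested = sum(1 for case in matrix if case["status"] != "untested")
    return tested / len(matrix)


if __name__ == "__main__":
    matrix = build_test_matrix(ATTACK_TYPES, SYSTEMS)
    matrix[0]["status"] = "passed"  # hypothetical result of a first exercise
    print(f"{len(matrix)} test cases, coverage {coverage(matrix):.0%}")
```

A matrix like this is only a planning aid; the exercises themselves would still need to be designed by a red team with critical infrastructure experience.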

The AI data gateway gap reveals an architectural vulnerability in the energy sector: gateway adoption stands at only 18%, trailing the global average by 17 points, even though dataset access controls lead at 50%. The pattern shows that the sector has implemented controls at individual systems and datasets but has not built the centralized visibility layer needed to aggregate signals across the AI environment.

Sophisticated adversaries do not attack single systems in isolation; they move laterally, compromising multiple components and masking their activity across distributed infrastructure. Energy’s point-control approach may detect anomalies at individual assets, but without centralized gateway monitoring, organizations cannot correlate signals across systems to identify coordinated attacks before they achieve physical impact.
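To make the idea of correlating signals concrete, here is a minimal sketch, assuming each point control emits alerts with a timestamp (in seconds), an asset name, and a signal description. It groups alerts that occur close together in time and flags only the groups that span more than one asset. The `Alert` type, the asset names, and the thresholds are illustrative assumptions, not anything specified in the report.

```python
from collections import namedtuple

# Hypothetical alert record emitted by a per-asset (point) control.
Alert = namedtuple("Alert", ["timestamp", "asset", "signal"])


def correlate_alerts(alerts, max_gap_seconds=300, min_assets=2):
    """Cluster alerts separated by at most max_gap_seconds and return
    only the clusters that involve at least min_assets distinct assets."""
    clusters = []
    current = []
    for alert in sorted(alerts, key=lambda a: a.timestamp):
        # A long quiet period closes the current cluster.
        if current and alert.timestamp - current[-1].timestamp > max_gap_seconds:
            clusters.append(current)
            current = []
        current.append(alert)
    if current:
        clusters.append(current)
    return [c for c in clusters if len({a.asset for a in c}) >= min_assets]


if __name__ == "__main__":
    alerts = [
        Alert(0, "grid_management_ai", "unusual prompt pattern"),
        Alert(120, "pipeline_monitoring", "out-of-range sensor input"),
        Alert(200, "predictive_maintenance", "training data drift"),
        Alert(5000, "grid_management_ai", "unusual prompt pattern"),
    ]
    for cluster in correlate_alerts(alerts):
        print("possible coordinated activity:", [a.asset for a in cluster])
```

Viewed in isolation, each of the first three alerts might look like a minor anomaly at a single asset. Only an aggregated view shows that three systems raised alerts within a few minutes of each other.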

The Need for Incident Response Playbooks

With AI incident playbook adoption at just 14%, the energy sector trails the global average by 13 points. When an AI system is compromised, 86% of energy organizations will be building their response in real time. The implications for critical infrastructure are severe, because extended dwell time in energy AI systems allows adversaries to study grid operations and identify cascading failure points.

Organizations must develop AI-specific incident response playbooks for critical infrastructure contexts, documenting response procedures for model poisoning, adversarial manipulation, data exfiltration, and coordinated attacks on grid management systems. Conducting tabletop exercises with scenarios specific to energy AI threats would enhance preparedness.

Elevating AI Governance

Energy boards show appropriate attention to regulatory compliance (50%) and OT/IoT security (23%), but board attention to AI governance, at 32%, trails the global average by 14 points. The sector must frame AI governance as a critical infrastructure protection priority, connecting AI security investments to grid reliability, public safety, and national security outcomes.

Finally, organizations should implement defense-in-depth data protection for AI training data. Closing the 12-point encryption gap requires encrypting all training data containing grid operations intelligence, with formal classification and protection of AI training datasets.