The EU’s AI Act: A Comprehensive Analysis

The European Union Artificial Intelligence Act of 2024 represents a significant legislative effort to regulate the rapidly evolving field of artificial intelligence (AI). However, the Act has been criticized for its limitations, particularly its failure to address key stages of AI development.

Background and Context

First proposed by the European Commission in 2021, the Act was enacted in 2024 and has drawn attention from stakeholders across the sectors involved in AI. Opinion on the legislation is sharply divided: it is viewed as either too little too late or too much too soon.

As the EU attempts to legislate AI, it faces the risk of falling behind in innovation compared to countries like the United States, China, and Russia. The rapid pace of technological advancement in places like Silicon Valley, where new AI firms are emerging at an unprecedented rate, raises concerns about the EU’s competitive position.

Recent Developments in the U.S.

In 2024, the California legislature passed SB 1047, a Senate bill on AI safety targeting the largest AI models and the companies behind them. Governor Gavin Newsom vetoed the bill, however, expressing concern that focusing solely on the largest models might overlook the potential dangers posed by smaller, specialized AI systems.

State Senator Scott Wiener, the author of SB 1047, highlighted a troubling reality: without binding restrictions, companies developing powerful AI technologies operate without meaningful oversight, a problem compounded by the lack of federal regulation.

Implications of California’s Example

California's failure to enact AI regulation raises concerns that other states, and possibly federal authorities, will likewise decline to act. This ongoing struggle reflects the historical tension between federal and state governance in the U.S.

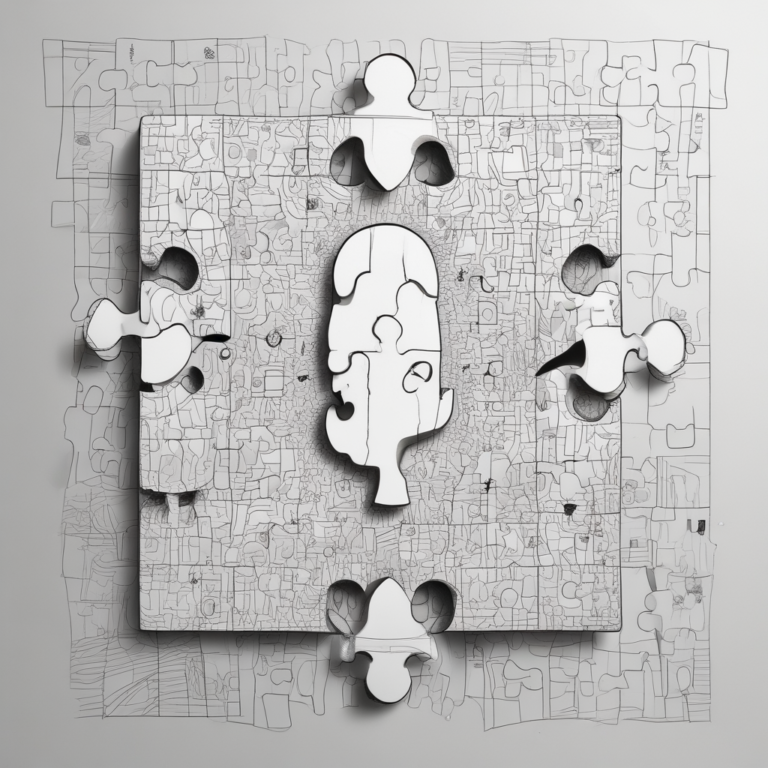

Understanding the AI Lifecycle

A critical analysis of the EU Artificial Intelligence Act reveals gaps in its coverage of the AI lifecycle. The lifecycle can be divided into five essential stages, of which the Act addresses only the last three:

- Basic theory

- Architecture design

- Data

- Algorithmic coding

- Usage

While the Act covers data, algorithmic coding, and usage, it misses the first two stages, basic theory and architecture design, both of which are crucial for a comprehensive regulatory framework.

The Importance of Basic Theory

The basic theory underlying generative artificial intelligence involves mathematical principles such as statistics, calculus, and probability, along with the architecture of neural networks. Understanding these foundational elements is essential for identifying and mitigating bias in AI systems.

Regulatory efforts should extend to the original concepts of neural networks, with the aim of identifying biases embedded in the foundational theories and working toward models with less inherent bias.

Future Considerations for Regulation

As the discourse surrounding AI legislation evolves, several pressing questions arise:

- Which stages of the AI lifecycle should be regulated, and which should remain unregulated?

- Should the behaviors of research scientists be subject to legislation and oversight?

- How can basic theory be regulated without stifling innovation in computer science?

Governments worldwide should observe the EU’s implementation of the AI Act and carefully consider expanding the scope of AI legislation to encompass basic theory and architecture design. Maintaining a balance between regulation and innovation will be vital as AI continues to evolve.