6 Cybersecurity Trends Shaping Governance and AI Adoption in 2026

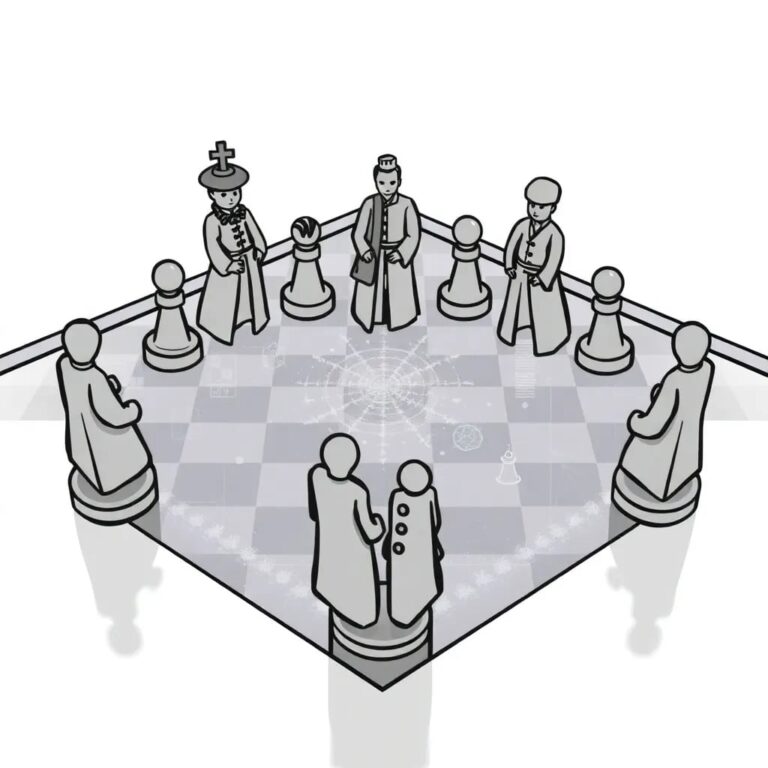

The rapid rise of AI, escalating geopolitical tensions, regulatory uncertainty, and an increasingly complex threat landscape are shaping the top cybersecurity trends for 2026, according to a recent report.

Agentic AI Demands Cybersecurity Oversight

Agentic AI is being adopted at speed by both employees and developers, creating new attack surfaces. The emergence of no-code and low-code tools, alongside vibe coding, is accelerating this shift, leading to the proliferation of unmanaged AI agents, insecure code, and heightened regulatory compliance risks.

While these AI agents and automation tools are becoming increasingly accessible, strong governance remains essential. Cybersecurity leaders must identify both sanctioned and unsanctioned AI agents, enforce robust controls for each, and develop incident response playbooks to address potential risks.
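As an illustration of the first step, identifying sanctioned and unsanctioned agents, the following is a minimal sketch. It assumes a discovery process has already produced a list of agents and that an approved-agent list exists. The record shape, the function name `split_by_approval`, and all agent names are hypothetical and do not come from the report.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class DiscoveredAgent:
    """An AI agent found during discovery (hypothetical record shape)."""
    name: str
    owner: str


def split_by_approval(discovered, approved_names):
    """Partition discovered agents into (sanctioned, unsanctioned) lists."""
    sanctioned = [a for a in discovered if a.name in approved_names]
    unsanctioned = [a for a in discovered if a.name not in approved_names]
    return sanctioned, unsanctioned


# Example data; every name here is invented for illustration.
approved = {"helpdesk-summarizer"}
found = [
    DiscoveredAgent("helpdesk-summarizer", "it-ops"),
    DiscoveredAgent("sales-email-bot", "unknown"),
]

sanctioned, unsanctioned = split_by_approval(found, approved)
for agent in unsanctioned:
    print(f"Unsanctioned agent needs review: {agent.name} (owner: {agent.owner})")
```

In practice, the unsanctioned list would feed whichever control applies: approval, restriction, or removal.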

Postquantum Computing Moves into Action Plans

Advances in quantum computing are predicted to render the asymmetric cryptography that organizations rely on unsafe by 2030. To avoid data breaches and financial losses from “harvest now, decrypt later” attacks on long-lived sensitive data, organizations must begin adopting postquantum cryptography alternatives now.

This shift is reshaping cybersecurity strategies by prompting organizations to identify, manage, and replace traditional encryption methods while prioritizing cryptographic agility. Investing in these capabilities now will help keep assets secure once quantum threats become a reality.
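The "identify" step can be sketched as a simple scan of a cryptographic inventory. The example below assumes an inventory already exists as a list of records. The record shape, system names, and the function `flag_for_migration` are hypothetical. The set of algorithms reflects general cryptographic knowledge (asymmetric schemes breakable by Shor's algorithm) and is not a list taken from the report.

```python
# Widely used asymmetric algorithms that a sufficiently large quantum
# computer could break with Shor's algorithm. Illustrative, not exhaustive.
QUANTUM_VULNERABLE = {"RSA", "DSA", "DH", "ECDH", "ECDSA"}


def flag_for_migration(inventory):
    """Return the inventory entries that use a quantum-vulnerable algorithm."""
    return [
        item for item in inventory
        if item["algorithm"].upper() in QUANTUM_VULNERABLE
    ]


# Hypothetical inventory; real data would come from scans or a CMDB.
inventory = [
    {"system": "vpn-gateway", "algorithm": "RSA"},
    {"system": "backup-archive", "algorithm": "AES-256"},
    {"system": "code-signing", "algorithm": "ecdsa"},
]

to_migrate = flag_for_migration(inventory)
for item in to_migrate:
    print(f"{item['system']}: plan replacement for {item['algorithm']}")
```

Keeping algorithm names in data rather than hard-coded in application logic is one small step toward cryptographic agility, since it makes swapping algorithms a configuration change.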

Identity and Access Management Adapts to AI Agents

The rise of AI agents presents new challenges to traditional identity and access management (IAM) strategies, particularly in identity registration, governance, credential automation, and policy-driven authorization for machine actors. Ignoring these challenges could lead to increased access-related cybersecurity incidents as autonomous agents become more prevalent.

Organizations are advised to take a targeted, risk-based approach by investing where gaps and risks are greatest while leveraging automation to secure critical assets in AI-centric environments.
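To make policy-driven authorization for machine actors more concrete, here is a minimal sketch of a deny-by-default check. It assumes each agent is registered with an owner and an explicit set of scopes. The `AgentIdentity` type, the scope strings, and the policy table are hypothetical and are not tied to any particular IAM product.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class AgentIdentity:
    """A registered machine identity (hypothetical shape)."""
    agent_id: str
    owner: str
    scopes: frozenset


# Policy table: (action, resource) -> scope required. Invented for illustration.
REQUIRED_SCOPE = {
    ("read", "tickets"): "tickets:read",
    ("close", "tickets"): "tickets:write",
}


def is_authorized(agent, action, resource):
    """Deny by default: allow only if a policy exists and the agent holds its scope."""
    required = REQUIRED_SCOPE.get((action, resource))
    return required is not None and required in agent.scopes


triage_bot = AgentIdentity("triage-bot-01", "secops", frozenset({"tickets:read"}))

print(is_authorized(triage_bot, "read", "tickets"))    # allowed by scope
print(is_authorized(triage_bot, "close", "tickets"))   # scope missing
print(is_authorized(triage_bot, "delete", "tickets"))  # no policy defined
```

Denying any action that has no policy entry keeps newly introduced agents from inheriting access by accident.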

AI-Driven SOC Solutions Destabilize Operational Norms

Fueled by cost-optimization efforts and growing interest in AI, the rise of AI-enabled Security Operations Centers (SOCs) introduces new layers of complexity. While these technologies enhance alert triage and investigation workflows, they also escalate staffing pressures, necessitating upskilling and reshaping cost structures around AI tools.

To maximize the potential of AI in security operations, cybersecurity leaders must prioritize people alongside technology, strengthening workforce capabilities and implementing human-in-the-loop frameworks in AI-supported processes.
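One way to picture a human-in-the-loop framework is a routing rule that sends AI recommendations to an analyst whenever the action is high impact or the model's confidence is low. The sketch below is an assumption about how such a gate might look. The action names, the 0.9 threshold, and the function `route_recommendation` are hypothetical.

```python
# Actions considered too disruptive to apply without human approval.
# The set is invented for illustration.
HIGH_IMPACT_ACTIONS = {"isolate_host", "disable_account"}


def route_recommendation(action, confidence, threshold=0.9):
    """Decide whether an AI-proposed action is applied or sent to an analyst."""
    if action in HIGH_IMPACT_ACTIONS or confidence < threshold:
        return "analyst_review"
    return "auto_apply"


print(route_recommendation("close_alert", 0.95))
print(route_recommendation("close_alert", 0.60))
print(route_recommendation("isolate_host", 0.99))
```

The threshold and the high-impact list are the points where analysts keep control, so they are worth reviewing as the team gains experience with the tooling.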

GenAI Breaks Traditional Cybersecurity Awareness Tactics

Existing security awareness initiatives have proven inadequate in mitigating cybersecurity risks, especially as GenAI adoption accelerates. A survey indicates that over 57 percent of employees use personal GenAI accounts for work, with 33 percent admitting to inputting sensitive information into unapproved tools.

To address this, the recommendation is to shift from general awareness training to adaptive behavioral training programs that include AI-specific tasks. Strengthening governance and establishing clear policies for authorized use will help reduce exposure to privacy breaches and intellectual property loss.