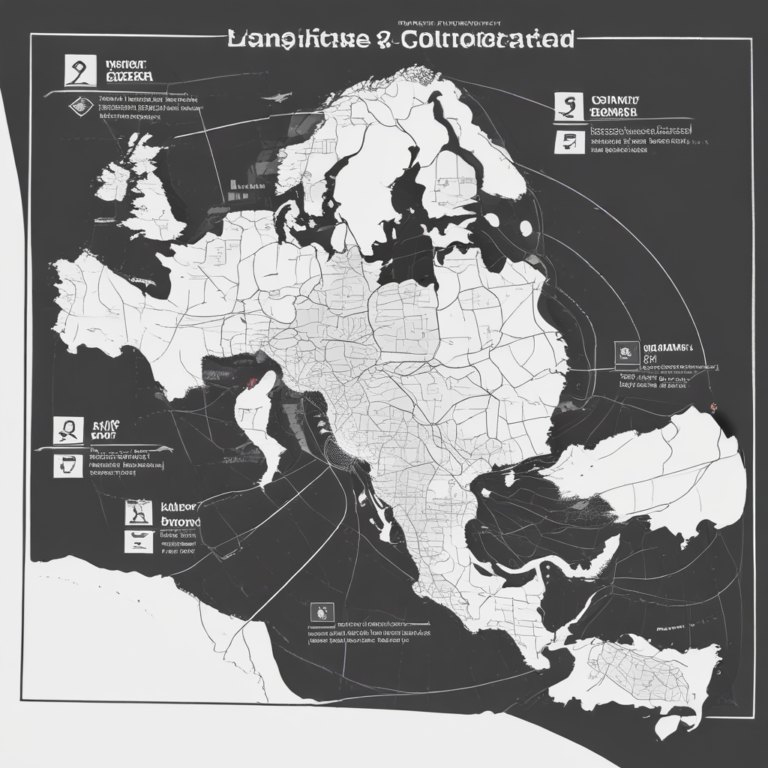

EU AI Act Deadlines and Compliance Overview

The European Union’s Artificial Intelligence Act (EU AI Act) is the EU’s framework for regulating AI systems across its member states. It is designed to ensure safety, transparency, and accountability in the deployment of AI technologies. The Act entered into force on August 1, 2024, and its obligations apply in stages over several years, with staggered deadlines that companies must meet.

Key Deadlines and Compliance Requirements

Understanding the critical deadlines outlined in the EU AI Act is essential for businesses involved in AI development and deployment. Below is a detailed breakdown of these deadlines along with compliance requirements:

1. February 2, 2025: Prohibited Practices

What to Comply With: The EU AI Act prohibits certain AI practices deemed harmful or manipulative. These include systems that exploit people’s vulnerabilities or manipulatively distort their behavior, social scoring, and, with narrow exceptions, real-time remote biometric identification in publicly accessible spaces for law enforcement. Companies must ensure their AI systems do not engage in any of these prohibited activities.

2. August 2, 2025: General-Purpose AI (GPAI)

What to Comply With: Providers of General-Purpose AI models must meet specific transparency obligations. These include maintaining technical documentation of their models and publishing a summary of the content used for training. Developers of large language models (LLMs) and generative AI (genAI) foundation models generally fall under this requirement.

3. August 2, 2026: High-Risk AI Systems

What to Comply With: High-risk AI systems, such as those used in healthcare or transportation, become subject to stricter regulation. Compliance includes implementing cybersecurity measures, establishing incident response protocols, and maintaining evaluation records for AI models.

4. August 2, 2026: Limited-Risk AI Systems

What to Comply With: Limited-risk AI systems, which may include applications in irrigation, agriculture, and customer service, face lighter requirements that center on transparency. Companies must label AI-generated outputs as ‘artificial’ and tell users when they are interacting with an AI system. Documenting the data used and the model itself, for example in a ‘model card’, supports these transparency duties.

5. August 2, 2027: Additional High-Risk AI Requirements

What to Comply With: Further requirements for high-risk AI systems take effect on this date, particularly for systems that serve as safety components of regulated products, including medical devices and products manufactured for children.
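
For teams that track these dates in internal tooling, the five deadlines above can be encoded as a small lookup table. The following Python sketch is only an illustration: the names `Milestone`, `MILESTONES`, `in_effect`, and `next_deadline` are hypothetical, the dates and labels are copied from the list above, and the result is a planning aid, not a legal determination of which obligations apply to a given system.

```python
from __future__ import annotations

from dataclasses import dataclass
from datetime import date


@dataclass(frozen=True)
class Milestone:
    """One staggered deadline from the list above (hypothetical structure)."""
    applies_from: date
    scope: str


# Dates and labels mirror the five numbered deadlines in this article.
MILESTONES = [
    Milestone(date(2025, 2, 2), "Prohibited practices"),
    Milestone(date(2025, 8, 2), "General-purpose AI (GPAI)"),
    Milestone(date(2026, 8, 2), "High-risk AI systems"),
    Milestone(date(2026, 8, 2), "Limited-risk AI systems"),
    Milestone(date(2027, 8, 2), "Additional high-risk AI requirements"),
]


def in_effect(on: date) -> list[str]:
    """Return the scopes whose deadline has been reached on the given date."""
    return [m.scope for m in MILESTONES if m.applies_from <= on]


def next_deadline(on: date) -> Milestone | None:
    """Return the earliest milestone strictly after the given date, if any."""
    upcoming = [m for m in MILESTONES if m.applies_from > on]
    return min(upcoming, key=lambda m: m.applies_from) if upcoming else None
```

For example, `in_effect(date(2026, 1, 1))` returns the first two scopes in the list, and `next_deadline(date(2026, 1, 1))` returns the August 2, 2026 high-risk milestone.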

Conclusion

As the EU AI Act phases in, organizations need to track which obligations apply to them and when. Mapping each AI system to its risk category and the corresponding deadline helps companies plan compliance work ahead of time and keep their systems aligned with EU requirements.