AI Governance for Developers: A Practical Guide

Decades ago, practices like unit testing, version control, and security protocols weren’t standard in software development. Today, they are non-negotiable parts of a professional developer’s toolkit. We are at a similar turning point with artificial intelligence. AI governance for developers is the next evolution in our craft: a structured approach to ensuring the systems we build are not only powerful but also ethical, compliant, and safe. This isn’t about adding bureaucratic red tape; it’s about applying engineering discipline to the unique challenges of AI. This guide breaks governance down into practical, actionable steps that integrate directly into your existing workflow, just like any other essential development practice.

Key Takeaways

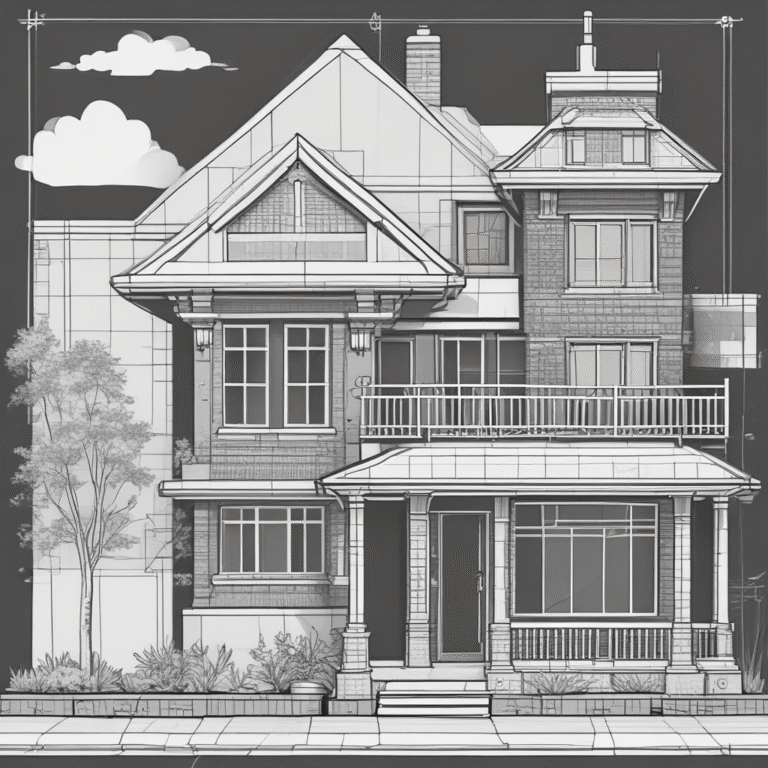

- Governance Is a Framework for Control, Not a Barrier: Think of AI governance as the architectural blueprint for your work. It provides the clear rules and structure needed to build with precision and authority, turning abstract principles into a concrete plan for creating reliable, compliant systems.

- Fairness by Design – Developers Set the Baseline: As a developer, you are the first line of defense against risks like bias. While legal teams can audit for issues, you have the power to prevent them from being built into the system in the first place. Owning this responsibility is how you build AI that is fair and safe by design.

- Automate Governance to Maintain Momentum: Integrate responsible practices directly into your development lifecycle using automated tools. Platforms that handle monitoring, risk assessment, and documentation make governance a seamless part of your workflow, not a final hurdle that slows you down.

What is AI Governance for Developers?

AI governance is the set of guardrails that ensures artificial intelligence is developed and used safely, ethically, and in compliance with regulations. For you, the developer, this isn’t just abstract corporate policy. It’s the practical framework that guides how you design, build, and deploy AI systems responsibly. Think of it as the rulebook that helps you create powerful tools while minimizing risks like bias, privacy violations, and unpredictable behavior.

A strong governance approach doesn’t slow you down; it provides a clear path forward, allowing you to create with confidence. It’s about establishing the right processes and standards so that responsible development becomes second nature, not an afterthought. This structure is what separates successful, scalable AI from projects that get stuck in review cycles or, worse, cause real-world harm. By understanding and applying these principles, you move from simply writing code to architecting trustworthy systems.

Core Components of a Governance Framework

Think of a governance framework as the operating system for your company’s AI strategy. It’s a system of rules, plans, and tools that brings everyone together—from data teams and engineers to legal and business leaders. A solid framework makes your AI models more reliable and predictable, reduces legal and compliance risks, and brings clarity to how automated decisions are made.

Your Role in AI Governance

While your CEO and senior leaders are ultimately accountable for the company’s AI strategy, your role as a developer is critical. You are on the front lines. Because you build, train, and test the models, you have a direct hand in making sure AI is fair and functions as intended. Legal and audit teams can check for risks and biases, but you are best equipped to prevent them from being coded into the system in the first place.

How Governance Shapes the Development Lifecycle

AI governance isn’t a final step or a compliance hurdle to clear before launch. It’s a set of practices woven into every stage of an AI system’s life. From the initial concept and data collection through development, testing, deployment, and ongoing management, governance provides the structure for making responsible choices. These frameworks and policies are what turn abstract ethical principles into concrete actions within your workflow.

Core Principles of AI Governance

- Transparency and Explainability: Transparency means being open about where you use AI and what your models do, while explainability is your ability to describe, in plain language, why a model made a specific decision.

- Fairness and Bias Mitigation: Fairness in AI means your models don’t create or perpetuate biases against certain groups. Your role is to actively identify and mitigate these biases through careful examination of your training data.

- Privacy and Data Security: AI models are data-hungry, making privacy and data security essential. Protect personal information at every stage of data handling.

- Clear Accountability: Define ownership for the AI’s actions and outcomes to establish clear lines of responsibility for monitoring and managing AI systems.

- Foundational Ethics: Embed a set of core values—like safety, human well-being, and societal impact—into your work.
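As one way to make the fairness principle concrete, the sketch below examines a training set for uneven outcomes across groups. It is a minimal illustration, not a complete fairness audit: the function names, the `group` and `approved` fields, and the toy rows are all hypothetical, and the gap in positive-label rates is only one of several measures you might choose.

```python
from collections import defaultdict


def positive_rate_by_group(rows, group_key, label_key):
    """Return the share of positive labels (label == 1) for each group."""
    totals = defaultdict(int)
    positives = defaultdict(int)
    for row in rows:
        group = row[group_key]
        totals[group] += 1
        if row[label_key] == 1:
            positives[group] += 1
    return {group: positives[group] / totals[group] for group in totals}


def parity_gap(rates):
    """Largest difference in positive rate between any two groups."""
    return max(rates.values()) - min(rates.values())


# Hypothetical toy data: historical decisions used as training labels.
training_rows = [
    {"group": "a", "approved": 1},
    {"group": "a", "approved": 1},
    {"group": "a", "approved": 0},
    {"group": "a", "approved": 1},
    {"group": "b", "approved": 1},
    {"group": "b", "approved": 0},
    {"group": "b", "approved": 0},
    {"group": "b", "approved": 0},
]

rates = positive_rate_by_group(training_rows, "group", "approved")
gap = parity_gap(rates)
print(rates, gap)
```

A large gap does not prove the data is biased, but it is a signal worth investigating before a model learns from those labels.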

How to Implement AI Governance

Putting AI governance into practice is less about creating a rigid rulebook and more about building a strong, flexible system. It’s a structured approach that integrates responsible practices directly into your development lifecycle. By breaking it down into clear, manageable steps, you can build a foundation for AI that is not only powerful but also trustworthy and compliant.

Establish Your Governance Framework

Your first step is to establish a governance framework. This framework outlines the core principles, policies, and practices that guide how you build and deploy AI systems. It should clearly define roles and responsibilities and the ethical lines you won’t cross.

Define Documentation Standards

Clear and consistent documentation is the backbone of good governance. Agree on what information to record for every model developed, including its purpose, datasets used, and performance metrics.
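One lightweight way to enforce a documentation standard is to keep each model's record as structured data that can be checked automatically. The sketch below assumes a hypothetical `ModelRecord` class covering the fields named above (purpose, datasets, and performance metrics); the model name, dataset file, and metric value are made-up placeholders.

```python
import json
from dataclasses import asdict, dataclass


@dataclass
class ModelRecord:
    """Minimal documentation record for one model (hypothetical schema)."""
    name: str
    purpose: str
    datasets: list
    metrics: dict

    def missing_fields(self):
        """Return the names of required fields that were left empty."""
        required = {
            "purpose": self.purpose,
            "datasets": self.datasets,
            "metrics": self.metrics,
        }
        return [field_name for field_name, value in required.items() if not value]


# Placeholder values for illustration only.
record = ModelRecord(
    name="loan-scoring-v1",
    purpose="Rank loan applications for manual review",
    datasets=["applications_2023.csv"],
    metrics={"auc": 0.81},
)

if record.missing_fields():
    raise ValueError(f"Incomplete model record: {record.missing_fields()}")

# Serialize the record so it can be stored alongside the model.
print(json.dumps(asdict(record), indent=2))
```

Because the record is plain data, a check like `missing_fields()` can run in code review or CI, so incomplete documentation is caught early rather than at launch.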

Create Testing and Validation Protocols

Before any high-risk model goes live, it needs to undergo rigorous testing that looks for potential issues like bias and security flaws. Standardizing these tests creates a repeatable process for every project.
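A standardized protocol can be as simple as a fixed list of checks that every model's evaluation report must pass before release. The sketch below is one possible shape for such a gate, under stated assumptions: the report keys (`parity_gap`, `open_security_findings`), the check functions, and the 0.10 limit are all hypothetical and would come from your own framework.

```python
from typing import Callable, List, Tuple

# A check takes an evaluation report and returns (passed, message).
Check = Callable[[dict], Tuple[bool, str]]


def check_parity_gap(report: dict) -> Tuple[bool, str]:
    """Fail if outcome rates differ too much across groups (illustrative limit)."""
    gap = report["parity_gap"]
    return gap <= 0.10, f"parity gap {gap:.2f} (limit 0.10)"


def check_no_open_security_findings(report: dict) -> Tuple[bool, str]:
    """Fail if any security finding is still unresolved."""
    count = report["open_security_findings"]
    return count == 0, f"{count} open security findings"


# The same list is reused for every project, which makes the process repeatable.
STANDARD_CHECKS: List[Check] = [check_parity_gap, check_no_open_security_findings]


def run_release_gate(report: dict, checks: List[Check] = STANDARD_CHECKS) -> List[str]:
    """Run every check and return a description of each failure."""
    failures = []
    for check in checks:
        passed, message = check(report)
        if not passed:
            failures.append(f"{check.__name__}: {message}")
    return failures


# Hypothetical evaluation report for a candidate model.
failures = run_release_gate({"parity_gap": 0.25, "open_security_findings": 0})
for failure in failures:
    print("BLOCKED:", failure)
```

Adding a new requirement then means adding one function to the list, rather than rewriting each project's test plan.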

Align with Your Stakeholders

AI governance is a team sport. Work closely with a diverse group of stakeholders to ensure your AI systems align with broader company goals and values.

Set Up Continuous Monitoring

An AI system’s behavior can change over time as the data it sees in production drifts away from the data it was trained on, making continuous monitoring essential. Regularly track your model’s performance and set up automated alerts for critical issues.
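As a rough sketch of what an automated alert could look like, the example below tracks accuracy over a rolling window of recent predictions and flags when it falls below a threshold. The `AccuracyMonitor` class, the window size, and the threshold are illustrative assumptions; a real setup would also need a way to collect ground-truth outcomes and to route the alert somewhere useful.

```python
from collections import deque


class AccuracyMonitor:
    """Tracks accuracy over the most recent predictions (hypothetical helper)."""

    def __init__(self, window_size=100, min_accuracy=0.90):
        self.window_size = window_size
        self.min_accuracy = min_accuracy  # illustrative threshold
        # deque with maxlen drops the oldest outcome once the window is full
        self.outcomes = deque(maxlen=window_size)

    def record(self, prediction, actual):
        """Store whether a single prediction matched the observed outcome."""
        self.outcomes.append(prediction == actual)

    def accuracy(self):
        """Accuracy over the current window, or None if nothing is recorded."""
        if not self.outcomes:
            return None
        return sum(self.outcomes) / len(self.outcomes)

    def should_alert(self):
        """Alert only once the window is full, to avoid noisy early alarms."""
        window_full = len(self.outcomes) == self.window_size
        return window_full and self.accuracy() < self.min_accuracy


# Small window and made-up outcomes so the behavior is easy to follow.
monitor = AccuracyMonitor(window_size=4, min_accuracy=0.75)
for prediction, actual in [(1, 1), (0, 0), (1, 0), (0, 1)]:
    monitor.record(prediction, actual)

if monitor.should_alert():
    print(f"ALERT: rolling accuracy fell to {monitor.accuracy():.2f}")
```

Accuracy is only one signal; the same pattern applies to any metric you decide is critical for the system.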

Overcome Common Implementation Challenges

One common challenge is that AI technology often moves faster than the frameworks designed to govern it. To keep pace, look for governance platforms with flexible APIs that integrate smoothly into your existing workflows, so you can update your checks as tools and requirements change.

Conclusion

Effective AI governance isn’t a final hurdle to clear before deployment; it’s a thread you weave through every stage of the development lifecycle. By integrating governance from the start, you create a more robust, ethical, and compliant system.